Pi Music Synthesizer

Zifu Qin (zq72) | Zekun Chen (zc277)

ECE 5725 | May 20, 2022

Demonstration Video

Introduction

For the final project of ECE 5725, the team built an embedded music synthesizer for music production on a Raspberry Pi 4. The device offers several input and instrument sources: a virtual drum set, a virtual piano, a physical MIDI piano keyboard, and a USB microphone. The virtual drum set and the virtual piano are drawn on the PiTFT touchscreen, so users play them by touching the PiTFT. The MIDI keyboard and the USB microphone connect to the Raspberry Pi 4 through its USB ports. Linux commands such as aconnect and arecord let the MIDI keyboard produce sound and let the USB microphone record vocals into wav files. The team also built a GUI on the PiTFT that provides post-production functions such as concatenation, stacking, tone processing, reverb, and echo. All the software is written in a single Python file. Everything shown on the PiTFT is drawn with the Pygame module, and the remaining functions use other Python modules such as NumPy, PyAudio, and scipy.io.wavfile.

Figure 1. Pi Music Synthesizer.

Project Objective:

The goal of our final project is to build a Raspberry Pi 4 based embedded music synthesizer for music production. The device has several input and instrument sources, including a virtual drum set, a virtual piano, a physical MIDI piano keyboard, and a USB microphone. Each of these instruments, as well as the microphone, can be played, recorded, edited, and synthesized, so users can perform music production on this embedded system.

Design and Testing

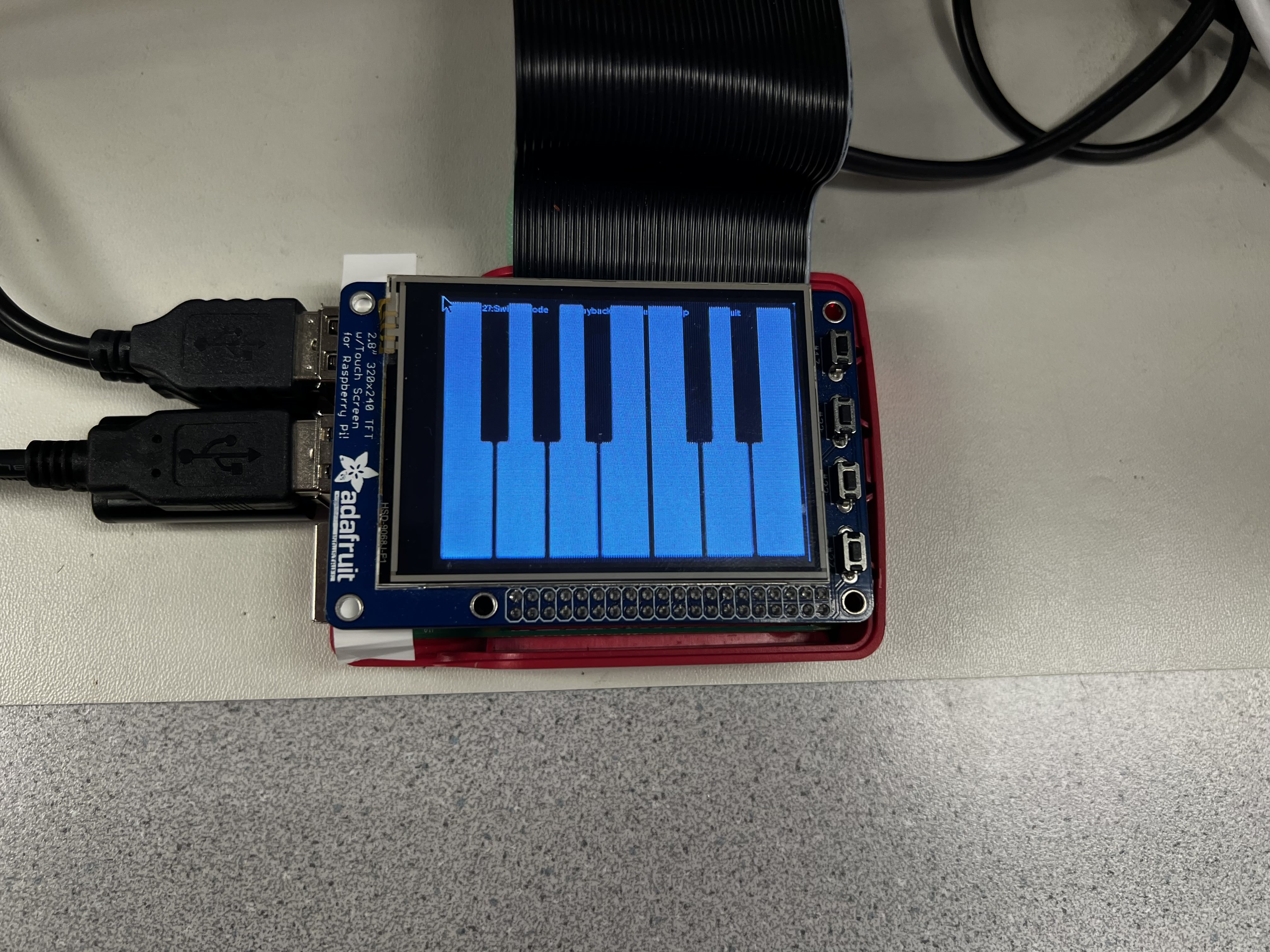

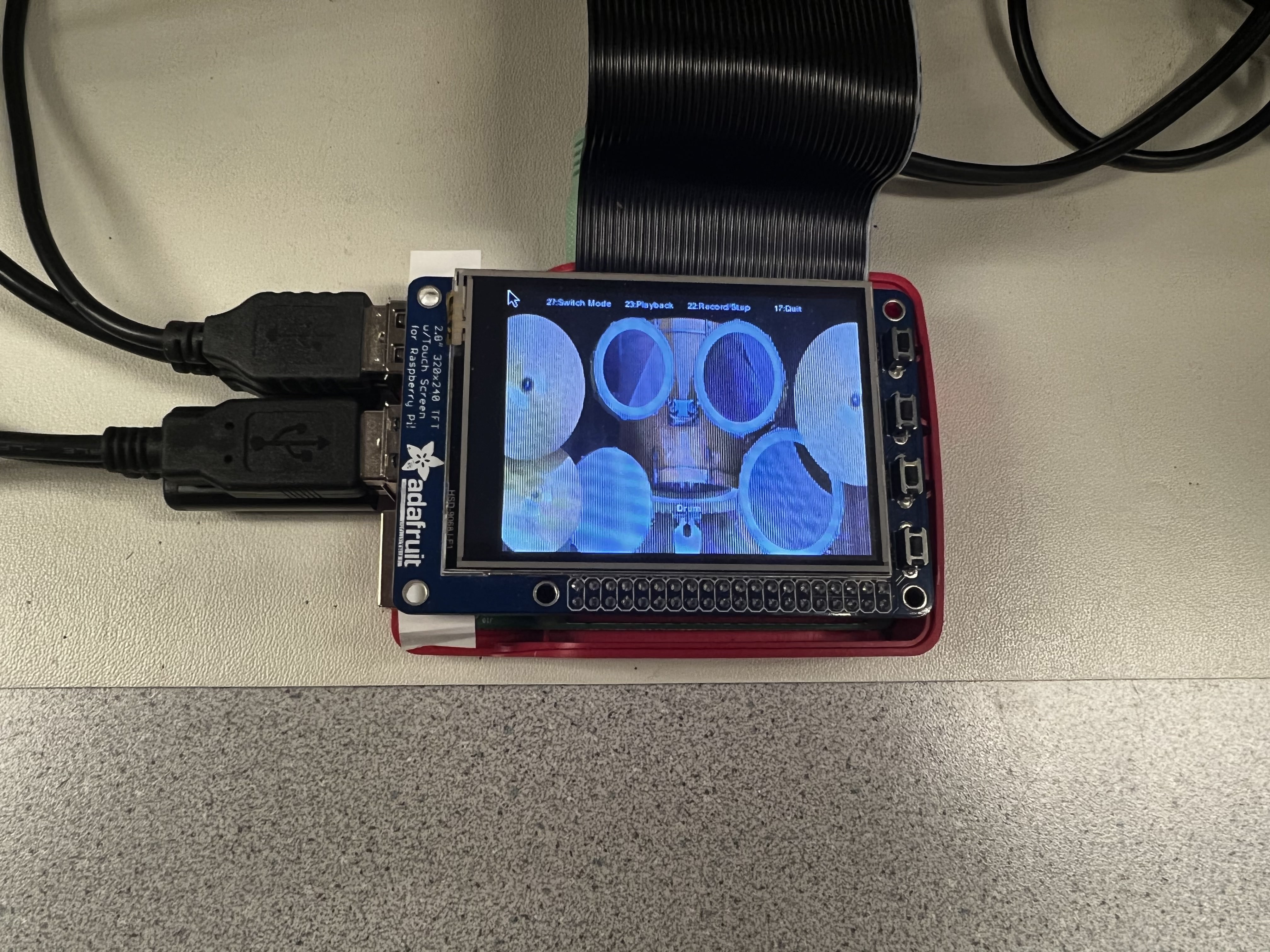

We started by implementing the virtual piano (shown in Figure 2) and the virtual drums (shown in Figure 3) on the touchscreen. After measuring the size of a piano key in pixels against the size of a human finger, we placed one octave of the piano (7 white keys + 5 black keys) on the Pi-TFT screen. On a separate screen we placed 7 different drums, each in its own region of the screen and each with a different function. Physical buttons wired to GPIO pins let users switch back and forth between the instruments.

Figure 2. The virtual piano.

Figure 3. The virtual drum set.

The Pi-TFT reports the location of each touch, and the program looks that location up in a dictionary that maps screen regions to the frequencies of the different tones. We use the Python audio library PyAudio to synthesize the piano sound, with the piano tone frequencies taken from an online reference [4]. By stacking multiple sine waves, we can generate tones that mimic a piano. Drum tones are harder to generate from sine waves of different frequencies, so within the scope of this project we use pre-recorded drum samples as the sound source of the virtual drum set.
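As a minimal sketch of the two ideas above, the snippet below maps a touch x-coordinate to a note frequency and builds a tone by summing a fundamental with a few harmonics. The key boundaries and frequencies follow the white-key row in the code appendix; the function names and the harmonic weights are illustrative assumptions, not the values used in the project.

```python
import bisect

import numpy as np

# Right edge (in pixels) of each white key and its frequency in Hz.
# Boundaries and frequencies follow the code appendix (F3 to E4).
KEY_EDGES = [46, 92, 138, 184, 230, 276, 320]
KEY_FREQS = [174.61, 196.00, 220.00, 246.94, 261.63, 293.66, 329.63]


def touch_to_freq(x):
    """Return the frequency of the white key under pixel column x."""
    idx = bisect.bisect_right(KEY_EDGES, x)
    idx = min(idx, len(KEY_FREQS) - 1)  # clamp touches at the right edge
    return KEY_FREQS[idx]


def stacked_tone(freq, duration=1.0, fs=44100, weights=(1.0, 0.5, 0.25)):
    """Sum harmonics of freq; weights[k] scales harmonic k+1 (assumed values)."""
    t = np.arange(int(fs * duration)) / fs
    wave = sum(w * np.sin(2 * np.pi * (k + 1) * freq * t)
               for k, w in enumerate(weights))
    wave = wave / np.max(np.abs(wave))  # keep samples within [-1.0, 1.0]
    return wave.astype(np.float32)
```

The normalization step matters because PyAudio's paFloat32 format expects samples in the range [-1.0, 1.0], and a sum of several sine waves can exceed that range.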

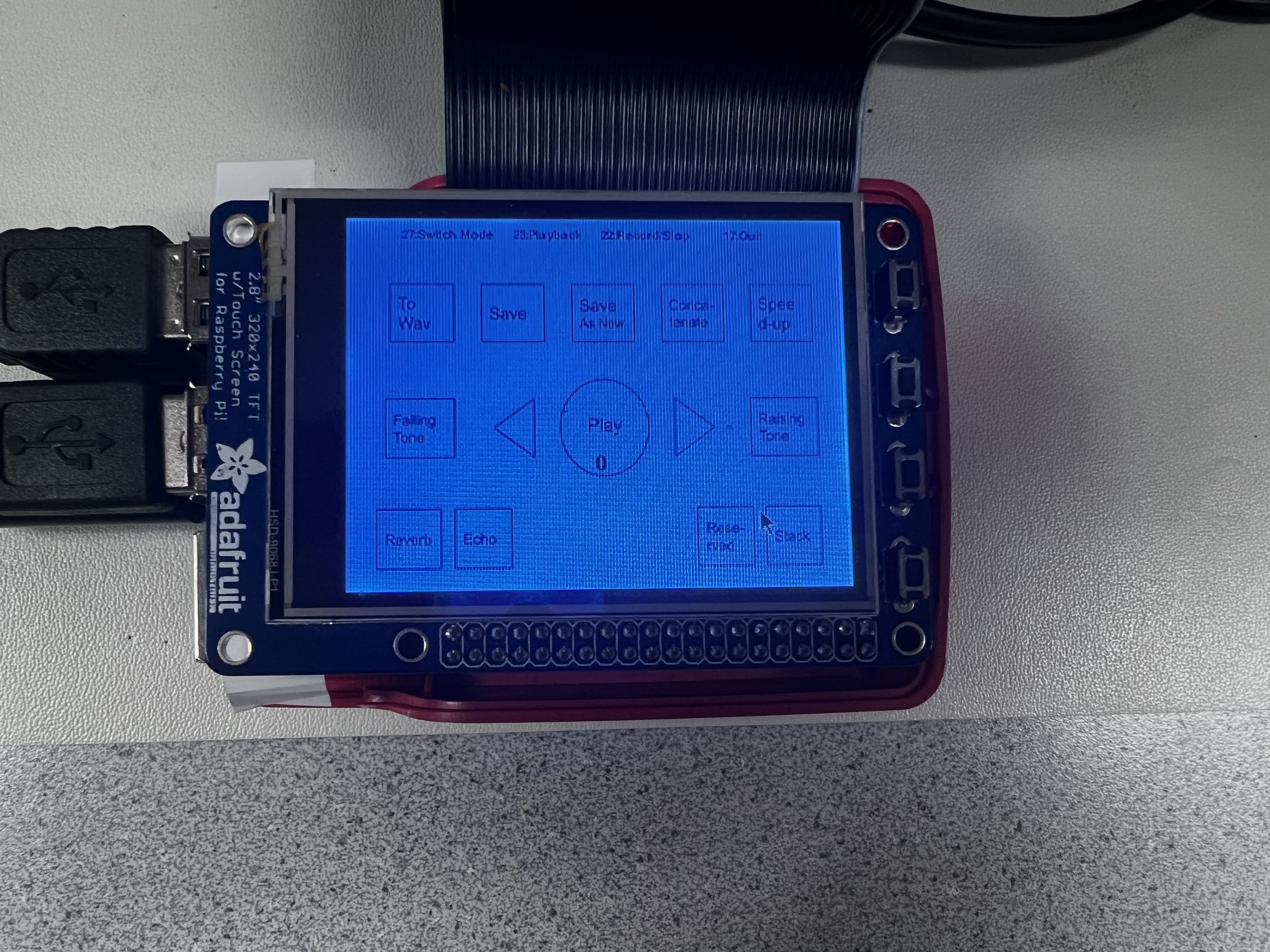

The digital keyboard and the drums share some basic system functions. A one-button record function on a GPIO pin starts and stops recording. Recordings are kept in a Python list and can be accessed from a GUI (shown in Figure 4). The central play button displays the number of the current track, and users scroll through the saved recordings with the left and right arrows on screen. Navigation is limited to the recordings saved in the Python list and wraps around: for example, going to the next track from the last recorded track returns to track 1.
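The wrap-around navigation can be sketched with modular arithmetic. The function names are hypothetical, and the sketch uses 0-based track indices as the code appendix does.

```python
def next_track(current, n_tracks):
    """Advance to the next track, wrapping to the first after the last."""
    return (current + 1) % n_tracks if n_tracks else 0


def previous_track(current, n_tracks):
    """Go back one track, wrapping to the last before the first."""
    return (current - 1) % n_tracks if n_tracks else 0
```

Returning 0 for an empty list is one possible choice; it keeps the index valid as soon as the first recording is saved.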

We implemented basic digital music processing functions: tone operations (falling and raising), concatenation of multiple clips, and adjustment of the playback speed of a recording. Tone operations shift each frequency by a fixed amount within the range 27.5 Hz (A0) to 4186 Hz (C8) [4]. Because the system records each tone together with its duration and the time between tones, we can adjust the speed of a recording by scaling those times. To concatenate recordings, we join the Python lists that store the recording information into a new list. The result of any of these operations can overwrite the original clip or be saved as a new clip through the save options.
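The three operations can be sketched on the clip format used in the code appendix, where each event is a tuple (duration, frequency, gap) and a duration of -1 marks a drum hit whose second field is a file name. Note that the appendix shifts tones by a fixed 32 Hz; the sketch below instead uses the equal-temperament semitone ratio 2^(1/12), which is a swapped-in alternative and not the project's method. All function names are hypothetical.

```python
A0_HZ, C8_HZ = 27.5, 4186.0   # piano range quoted in the text
SEMITONE = 2 ** (1 / 12)      # equal-temperament ratio between adjacent keys


def shift_tone(pattern, semitones):
    """Shift every tone by a number of semitones; drum hits are left alone."""
    shifted = []
    for duration, freq, gap in pattern:
        if duration == -1:  # drum hit: the freq slot holds a file name
            shifted.append((duration, freq, gap))
        else:
            new_freq = min(max(freq * SEMITONE ** semitones, A0_HZ), C8_HZ)
            shifted.append((duration, new_freq, gap))
    return shifted


def speed_up(pattern, factor=2.0):
    """Shorten the gap after every event by the given factor."""
    return [(d, f, gap / factor) for d, f, gap in pattern]


def concatenate(clips):
    """Join several clips (lists of events) into one new clip."""
    joined = []
    for clip in clips:
        joined += clip
    return joined
```

With a ratio-based shift, raising A3 (220 Hz) by one key gives about 233.08 Hz (A#3), which matches the piano key frequencies used elsewhere in the appendix.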

Figure 4. The GUI display of the device.

Figure 5. The MIDI Keyboard and USB microphone input.

To support more advanced music operations and to combine clips with the MIDI keyboard and microphone input (shown in Figure 5), we provide a function that converts a digital music clip stored as a Python structure into a '.wav' file. Once a clip is a wav file, we can apply effects such as reverb and echo to the waveform. For reverb, we first create a delayed copy of the sound by adding zeros in front of the original samples. We then create a second copy by adding the same number of zeros at the end of the original samples, so that both copies have the same length. The number of zeros is the delay time multiplied by the sampling frequency. The reverb signal is the sum of the two copies, with the delayed copy scaled by the reverb amplitude (typically a number between 0 and 1), and we write it to a new wav file. Echo is implemented in a similar way, except that instead of adding two padded copies we convolve the original samples with an impulse response that contains the direct sound and one delayed, attenuated impulse. Besides these effects, we can stack waveforms on top of each other, so clips from the digital piano, MIDI keyboard, drums, and human voice can be combined and users can produce a complete piece on the RPi.
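The two effects can be sketched for a mono signal as follows. The default delay (0.2 s) and amplitude (0.5) are the values that appear in the code appendix; the function names are hypothetical. In this sketch the impulse response has `delay + 1` samples so that both functions produce the same output, whereas the appendix uses `delay` samples, which places the echo one sample earlier.

```python
import numpy as np


def delay_and_sum(signal, fs, delay_s=0.2, amp=0.5):
    """Add a delayed, attenuated copy of a mono signal to itself."""
    delay = round(delay_s * fs)
    pad = np.zeros(delay)
    delayed = np.concatenate((pad, signal))   # zeros in front
    original = np.concatenate((signal, pad))  # zeros at the end
    return original + amp * delayed


def echo_by_convolution(signal, fs, delay_s=0.2, amp=0.5):
    """Same effect, expressed as convolution with a two-tap impulse response."""
    delay = round(delay_s * fs)
    impulse = np.zeros(delay + 1)
    impulse[0] = 1.0       # direct sound
    impulse[delay] = amp   # single delayed, attenuated repeat
    return np.convolve(signal, impulse)
```

Both functions return `len(signal) + delay` samples. A single repeat is closer to an echo than to a dense reverb; a richer reverb would need many decaying taps in the impulse response.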

The major problems we encountered in the PyGame implementation of the music synthesizer were error cases that arise when the user does not follow the manual. We had to add multiple callback routines and guard statements to prevent Python from crashing when an invalid state is entered. For example, when a user tries to access a clip that has not finished recording, the system forbids any editing operation until the user stops the recording. There are also restrictions that depend on whether a clip is stored as a Python structure or as a wav file, so we check the type of the object before doing any further processing of the clip.
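A guard of this kind might look like the sketch below. It relies on the convention visible in the code appendix that wav clips are stored as file-name strings while digital clips are stored as lists of events. The function name and all messages except "Could not change voice for now" are hypothetical.

```python
def can_edit_digital(recordlist, index, recording):
    """Return (ok, message) for a tone or speed edit on clip `index`."""
    if recording:
        return False, "Stop recording before editing"
    if not 0 <= index < len(recordlist):
        return False, "No clip selected"
    if isinstance(recordlist[index], str):  # wav clips are stored as file names
        return False, "Could not change voice for now"
    return True, ""
```

Collecting the checks in one function means every editor button can call the same guard and show the returned message on the screen instead of raising an exception.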

To record the duration of tones and the gaps between them, we kept an internal timer that starts when recording starts. We first tried to measure the duration from finger down to finger up on the Pi-TFT. However, the touchscreen is not sensitive enough to capture the exact duration, even when we set a bounce time. Instead of measuring the duration of finger inputs, we gave each tone a very short fixed duration (several ms), so that while the finger stays down the tone repeats and the number of repeats gives an estimate of the tone duration. After the finger is released, the timer runs until the user puts a finger down again, either on a different tone or on the same one. The gap between tones is the difference between the two timestamps.
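The gap measurement can be sketched as a small recorder that stores, for each event, the time elapsed since the previous event (or since recording started), which is how the code appendix fills the third field of each tuple. The class name and the injectable `clock` parameter are assumptions made so the logic can be exercised without real touches.

```python
import time


class GapRecorder:
    """Collect (duration, frequency, gap) events while recording."""

    def __init__(self, clock=time.time):
        self._clock = clock
        self._last = None
        self.pattern = []

    def start(self):
        """Begin a new recording and reset the reference timestamp."""
        self._last = self._clock()
        self.pattern = []

    def note(self, duration, freq):
        """Store an event with the time elapsed since the previous one."""
        now = self._clock()
        self.pattern.append((duration, freq, now - self._last))
        self._last = now
```

In use, `start()` would be called when the record button is pressed and `note()` on each touch.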

User Manual

a. Physical Buttons

17: Physical Quit button - Exit the system at any time

22: Record Start/Stop button - Press once to start recording, press again to stop and save the recording

23: Playback button - Play the most recent recording from any menu

27: Switching button - Push to switch between the 5 menus

b. Menus

1. Welcome Menu: Shows the physical button information and the menu information.

2. Virtual Drum Set: There are 8 drums in total.

3. Virtual Piano: An octave of the piano (7 white keys + 5 black keys) in tone E4 to D5 with pure tones.

4. USB Microphone & Piano Recording (shown in Figure 6): Select the recording duration on screen to begin recording. A message is displayed once the recording is finished and stored in a wav file.

5. Editor (GUI): Function menu to edit the clips you just made. See details in c.
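The fixed-duration recording in menu 4 relies on the arecord command. A minimal sketch of how such a call could be built and run from Python is shown below; the `-D` (device) and `-d` (duration in seconds) options are the ones used in the code appendix, while the function names are hypothetical and the device string `plughw:2,0` depends on how the USB microphone enumerates on a given system.

```python
import subprocess


def arecord_command(device, seconds, filename):
    """Build the arecord argument list for a fixed-length recording."""
    return ["arecord", "-D", device, "-d", str(int(seconds)), filename]


def record_clip(device, seconds, filename):
    """Run arecord; needs ALSA tools and a capture device to succeed."""
    result = subprocess.run(arecord_command(device, seconds, filename),
                            check=False)
    return result.returncode == 0
```

Passing the arguments as a list avoids building a shell string by concatenation, and the return code makes it possible to report a failed recording on the screen.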

c. Editor (GUI)

1. Digital music file processing

To Wav - convert digital music clip to wav file.

Save - save and overwrite current clip with modified clip information.

Save As New - save and create a new clip at the end with modified clip information.

2. Concatenation and Stack

Concatenate - Touch the Concatenate button to add the current clip to the concatenation list. Touch again to deselect the current clip. To concatenate the list, use Save or Save As New. To stack the list, all clips must first be converted into wav files; see Stack for details.

3. Digital music tone processing

Speedup - Increase the playback speed of clip by a factor of two

Falling tone - Decrease the tone of clip by one piano key frequency

Raising tone - Increase the tone of clip by one piano key frequency

4. Wav clip processing

Notice: Microphone input and MIDI keyboard input are by default stored in wav format. No need to convert!

Reverb - Applies a reverb effect to the current wav clip.

Echo - Applies an echo effect to the current wav clip.

Stack - Stacks the clips in the current concatenation list on top of each other. The clips are aligned at the beginning, and the new clip is as long as the longest clip.

Reserved - Reserved for further implementation
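The alignment rule described under Stack can be made explicit with a short NumPy sketch. The code appendix performs stacking with pydub's `AudioSegment.overlay`; the version below is an alternative illustration on raw sample arrays, and the function name and the peak normalization are assumptions.

```python
import numpy as np


def stack_clips(clips):
    """Mix 1-D float arrays aligned at sample 0; output spans the longest clip."""
    if not clips:
        return np.zeros(0)
    length = max(len(c) for c in clips)
    mix = np.zeros(length)
    for c in clips:
        mix[:len(c)] += c  # shorter clips are implicitly zero-padded at the end
    peak = np.max(np.abs(mix))
    if peak > 1.0:
        mix /= peak  # avoid clipping when several clips overlap
    return mix
```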

Result and Conclusion

We successfully completed the design introduced in our proposal, and we extended that initial design with additional functions. In summary, our Pi Music Synthesizer has the following features:

1. The device has multiple input and instrument sources including a virtual drum set, a virtual piano, a physical piano MIDI keyboard, and a USB microphone input.

2. The device works for multiple users simultaneously. For example, one user can sing using the USB microphone, the second user can play the drum on PiTFT, and the third user can play the MIDI piano keyboard.

3. Each track can be recorded and edited individually to have a better recording experience. Usually when recording songs, beats, instruments, and vocals are recorded in different tracks. Then, the final production would be mixing all the tracks. We can do the same thing using our device. All the instruments and vocals can be recorded in different tracks individually. Then, we can mix all the tracks together.

4. The device offers various functions and sound effects. Users can play, record, edit, synthesize, raise or lower tones, speed up beats, and apply reverb and echo. All the work can also be stored in the embedded system for future review.

Overall, we learned a lot about Linux, embedded systems, and program development not only during this final project, but also during the whole semester in ECE 5725. This final project gives us a great opportunity to build something we like using the knowledge we learned in ECE 5725 lectures. The experience in this course will definitely help us in the future when developing embedded systems.

Team

Zifu Qin

zq72@cornell.edu

Designed the input & instrument resources and sound effects.

Zekun Chen

zc277@cornell.edu

Designed the recording and editing functions.

Parts List

- Raspberry Pi Model 4B - Provided in Lab

- Pi-TFT - Provided in Lab

- External Speaker - Provided in lab

- Used MIDI Keyboard - $14.20 (including tax and shipping)

- USB microphone - $15.11 (including tax and shipping)

Total: $29.31

References

[1] Aconnect

[2] Arecord

[3] FluidSynth

[4] Piano Key Frequency

[5] Pyaudio Module

[6] Pydub Module

[7] Pygame Module

Code Appendix

Final_code_zq72_zc277.py:

# Spring 2022 ECE 5725 Final Project: Pi Music Synthesizer (music synthesizer built upon RPi and Pi-TFT)
# Author: zc277 Zekun Chen zq72 Zifu Qin
# Python Library used:
# pyaudio, pygame, pydub, scipy
import pyaudio
import numpy as np
import time
import RPi.GPIO as GPIO
import subprocess
import os
import pygame
from pygame.locals import *
import wave
from pydub import AudioSegment
from scipy.io.wavfile import write
import scipy.io.wavfile

############################## Sound Generation Functions using PyAudio ##############################################
###### Drum effect implemented with pre-defined drum sounds stored in wav file ######
def playdrum(drumnum):
    chunk = 1024
    # open a wav format music file
    path = "/home/pi/Project/drumtone/"+drumnum
    # f = wave.open(r"/home/pi/Project/drumtone/Rear_Center.wav","rb")
    f = wave.open(path,"rb")
    # open stream
    stream = p.open(format = p.get_format_from_width(f.getsampwidth()),
                    channels = f.getnchannels(),
                    rate = f.getframerate(),
                    output = True)
    # read data
    data = f.readframes(chunk)
    # play stream
    while data:
        stream.write(data)
        data = f.readframes(chunk)
    # stop stream
    stream.stop_stream()
    stream.close()
    f.close()  # release the wav file handle

###### Tone effect implemented with frequencies and sin functions in np.array format ######
def playsound(v,fsam,d,freq):
    # v: volume (currently unused), fsam: sampling rate in Hz, d: duration in seconds, freq: tone frequency in Hz
    # generate samples, note conversion to float32 array
    samples = (np.sin(2*np.pi*np.arange(fsam*d)*freq/fsam)).astype(np.float32)
    # samples = D4
    # for paFloat32 sample values must be in range [-1.0, 1.0]
    stream = p.open(format=pyaudio.paFloat32,
                    channels=1,
                    rate=fsam,
                    output=True)
    # play. May repeat with different volume values (if done interactively)
    # stream.write(v*samples)
    stream.write(samples)
    stream.stop_stream()
    stream.close()

############################## Menu update Functions using Pygame ##############################################
##### 1. Welcome Menu
def fillmainmenu():
    size = width, height = 320, 240
    BLACK = 0, 0, 0
    WHITE = 255, 255, 255
    screen = pygame.display.set_mode(size)
    screen.fill(BLACK) # Erase the Work space
    my_font = pygame.font.Font(None, 12)
    my_buttons = {'27:Switch Mode':(64,10) ,'23:Playback':(128,10) ,'22:Record/Stop':(192,10), '17:Quit':(256,10),'Main':(160,200)}
    for my_text, text_pos in my_buttons.items():
        text_surface = my_font.render(my_text, True, WHITE)
        rect = text_surface.get_rect(center=text_pos)
        screen.blit(text_surface, rect)
    pygame.display.flip()

##### 2. Drum Sets
def filldrum():
    size = width, height = 320, 240
    BLACK = 0, 0, 0
    WHITE = 255, 255, 255
    screen = pygame.display.set_mode(size)
    ball = pygame.image.load("drum.png")
    screen.fill(BLACK) # Erase the Work space
    screen.blit(ball, (0,0))
    my_font = pygame.font.Font(None, 12)
    my_buttons = {'27:Switch Mode':(64,10) ,'23:Playback':(128,10) ,'22:Record/Stop':(192,10), '17:Quit':(256,10), 'Drum':(160,200)}
    for my_text, text_pos in my_buttons.items():
        text_surface = my_font.render(my_text, True, WHITE)
        rect = text_surface.get_rect(center=text_pos)
        screen.blit(text_surface, rect)
    pygame.display.flip()

##### 3. Digital Piano (Tones)
def fillpiano():
    size = width, height = 320, 240
    BLACK = 0, 0, 0
    WHITE = 255, 255, 255
    screen = pygame.display.set_mode(size)
    ball = pygame.image.load("piano.jpg")
    screen.fill(BLACK) # Erase the Work space
    screen.blit(ball, (0,0))
    my_font = pygame.font.Font(None, 12)
    my_buttons = {'27:Switch Mode':(64,10) ,'23:Playback':(128,10) ,'22:Record/Stop':(192,10), '17:Quit':(256,10), 'Piano':(160,200)}
    for my_text, text_pos in my_buttons.items():
        text_surface = my_font.render(my_text, True, WHITE)
        rect = text_surface.get_rect(center=text_pos)
        screen.blit(text_surface, rect)
    pygame.display.flip()

##### 4. Recording Functions
def fillmicrophone(infod):
    size = width, height = 320, 240
    BLACK = 0, 0, 0
    WHITE = 255, 255, 255
    screen = pygame.display.set_mode(size)
    screen.fill(BLACK) # Erase the Work space
    my_font = pygame.font.Font(None, 12)
    my_buttons = {'27:Switch Mode':(64,10) ,'23:Playback':(128,10) ,'22:Record/Stop':(192,10), '17:Quit':(256,10)}
    for my_text, text_pos in my_buttons.items():
        text_surface = my_font.render(my_text, True, WHITE)
        rect = text_surface.get_rect(center=text_pos)
        screen.blit(text_surface, rect)
    my_font = pygame.font.Font(None, 20)
    my_buttons = {'Record 3s':(80,160), 'Record 5s':(160,160),'Record 7s':(240,160), infod:(160,200),'Piano 3s':(80,80), 'Piano 5s':(160,80),'Piano 7s':(240,80)}
    for my_text, text_pos in my_buttons.items():
        text_surface = my_font.render(my_text, True, WHITE)
        rect = text_surface.get_rect(center=text_pos)
        screen.blit(text_surface, rect)
    pygame.display.flip()

##### 5. Editing Functions
def fillwhat(c,infod):
    size = width, height = 320, 240
    BLACK = 0, 0, 0
    WHITE = 255, 255, 255
    screen = pygame.display.set_mode(size)
    ball = pygame.image.load("background.jpg")
    screen.fill(BLACK) # Erase the Work space
    screen.blit(ball, (0,0))
    my_font = pygame.font.Font(None, 12)
    my_buttons = {'27:Switch Mode':(64,10) ,'23:Playback':(128,10) ,'22:Record/Stop':(192,10), '17:Quit':(256,10)}
    for my_text, text_pos in my_buttons.items():
        text_surface = my_font.render(my_text, True, BLACK)
        rect = text_surface.get_rect(center=text_pos)
        screen.blit(text_surface, rect)
    my_font = pygame.font.Font(None, 20)
    # my_buttons = {'Previous':(64,140) ,'Next':(256,140) ,str(c):(160,160), 'PLAY':(160,140), infod:(160,200)}
    my_buttons = {str(c):(160,160), infod:(160,200)}
    for my_text, text_pos in my_buttons.items():
        text_surface = my_font.render(my_text, True, BLACK)
        rect = text_surface.get_rect(center=text_pos)
        screen.blit(text_surface, rect)
    pygame.display.flip()

############################## Setting Up GPIO pins and Configure Audio output ##############################################
GPIO.setmode(GPIO.BCM) # Set for broadcom numbering not board numbers...
GPIO.setup(17, GPIO.IN, pull_up_down=GPIO.PUD_UP)
GPIO.setup(22, GPIO.IN, pull_up_down=GPIO.PUD_UP)
GPIO.setup(23, GPIO.IN, pull_up_down=GPIO.PUD_UP)
GPIO.setup(27, GPIO.IN, pull_up_down=GPIO.PUD_UP)
os.system("aconnect 20:0 128:0")
os.putenv('SDL_VIDEODRIVER', 'fbcon') # Display on piTFT
os.putenv('SDL_FBDEV', '/dev/fb1')
os.putenv('SDL_MOUSEDRV', 'TSLIB') # Track mouse clicks on piTFT
os.putenv('SDL_MOUSEDEV', '/dev/input/touchscreen')
pygame.init()
# os.system("arecordmidi -p 20:0 test3.midi")
# os.system("playmidi -p 128.:0 test3.midi")
# os.system("arecord -D plughw:4,0 -d "+recordtime+" test7.wav")
# os.system("aplay test.wav")
p = pyaudio.PyAudio()
volume = 0.5 # range [0.0, 1.0]
fs = 44100 # sampling rate, Hz, must be integer
duration = 1 # in seconds, may be float
f = 440.0 # sine frequency, Hz, may be float
start = time.time()

############################## Initialize Variables ##############################################
MODE = 0
change = 0
# 0:main menu
# 1:drum
# 2:piano
# 3:record
# 4:editor
RECORD = False
recordlist = []
recordedpattern = []
currentrecord = 0
infodisplay = ""
newrecord = []
Concatenate = []
WAVE = False
# startrecord = 0
i=0

############################## Polling loop ##############################################
while True:
    # Fill the screen based on states
    if change == 0:
        fillmainmenu()
        change = -1
    elif change == 1:
        filldrum()
        change = -1
    elif change == 2:
        fillpiano()
        change = -1
    elif change == 3:
        fillmicrophone(infodisplay)
        change = -1
    elif change == 4:
        fillwhat(currentrecord,infodisplay)
        change = -1
    # Get touchscreen coordinates
    for event in pygame.event.get():
        # compare event types with ==, not identity
        if(event.type == MOUSEBUTTONDOWN):
            pos = pygame.mouse.get_pos()
        elif(event.type == MOUSEBUTTONUP):
            pos = pygame.mouse.get_pos()
            x,y = pos
            # print("touch coordinates:", x,y)
            my_touch = "touch coordinates:" + str(x)+", "+str(y)

            ############################## MODE = 1 Drum sets ##############################################
            if MODE == 1:
                currenttime=time.time()
                if y>120:
                    if x<80:
                        playdrum("drumt4.wav")
                        if RECORD:
                            recordedpattern.append((-1,"drumt4.wav",currenttime - startrecord))
                            startrecord = currenttime
                    elif x<160:
                        playdrum("drumt1.wav")
                        if RECORD:
                            recordedpattern.append((-1,"drumt1.wav",currenttime - startrecord))
                            startrecord = currenttime
                    elif x<240:
                        playdrum("drumt1.wav")
                        if RECORD:
                            recordedpattern.append((-1,"drumt1.wav",currenttime - startrecord))
                            startrecord = currenttime
                    elif x<320:
                        playdrum("drumt4.wav")
                        if RECORD:
                            recordedpattern.append((-1,"drumt4.wav",currenttime - startrecord))
                            startrecord = currenttime
                elif y<120:
                    if x<80:
                        playdrum("drumb1.wav")
                        if RECORD:
                            recordedpattern.append((-1,"drumb1.wav",currenttime - startrecord))
                            startrecord = currenttime
                    elif x<160:
                        playdrum("drumb2.wav")
                        if RECORD:
                            recordedpattern.append((-1,"drumb2.wav",currenttime - startrecord))
                            startrecord = currenttime
                    elif x<240:
                        playdrum("drumb3.wav")
                        if RECORD:
                            recordedpattern.append((-1,"drumb3.wav",currenttime - startrecord))
                            startrecord = currenttime
                    elif x<320:
                        playdrum("drumb3.wav")
                        if RECORD:
                            recordedpattern.append((-1,"drumb3.wav",currenttime - startrecord))
                            startrecord = currenttime

            ############################## MODE = 2 Digital Tones ##############################################
            if MODE == 2:
                currenttime=time.time()
                if y<120: # Black keys (sharps)
                    if x<56:
                        playsound(volume,fs,1,185)
                        print("playsound F3#")
                        if RECORD:
                            recordedpattern.append((1,185,currenttime - startrecord))
                            startrecord = currenttime
                    elif x<102:
                        playsound(volume,fs,1,207.65)
                        print("playsound G3#")
                        if RECORD:
                            recordedpattern.append((1,207.65,currenttime - startrecord))
                            startrecord = currenttime
                    elif x<160:
                        playsound(volume,fs,1,233.08)
                        print("playsound A3#")
                        if RECORD:
                            recordedpattern.append((1,233.08,currenttime - startrecord))
                            startrecord = currenttime
                    elif x<243:
                        playsound(volume,fs,1,277.18)
                        print("playsound C4#")
                        if RECORD:
                            recordedpattern.append((1,277.18,currenttime - startrecord))
                            startrecord = currenttime
                    elif x<320:
                        playsound(volume,fs,1,311.13)
                        print("playsound D4#")
                        if RECORD:
                            recordedpattern.append((1,311.13,currenttime - startrecord))
                            startrecord = currenttime
                elif y>120: # White keys (naturals)
                    if x<46:
                        playsound(volume,fs,1,174.61)
                        print("playsound F3")
                        if RECORD:
                            recordedpattern.append((1,174.61,currenttime - startrecord))
                            startrecord = currenttime
                    elif x<92:
                        playsound(volume,fs,1,196)
                        print("playsound G3")
                        if RECORD:
                            recordedpattern.append((1,196,currenttime - startrecord))
                            startrecord = currenttime
                    elif x<138:
                        playsound(volume,fs,1,220)
                        print("playsound A3")
                        if RECORD:
                            recordedpattern.append((1,220,currenttime - startrecord))
                            startrecord = currenttime
                    elif x<184:
                        playsound(volume,fs,1,246.94)
                        print("playsound B3")
                        if RECORD:
                            recordedpattern.append((1,246.94,currenttime - startrecord))
                            startrecord = currenttime
                    elif x<230:
                        playsound(volume,fs,1,261.63)
                        print("playsound C4")
                        if RECORD:
                            recordedpattern.append((1,261.63,currenttime - startrecord))
                            startrecord = currenttime
                    elif x<276:
                        playsound(volume,fs,1,293.66)
                        print("playsound D4")
                        if RECORD:
                            recordedpattern.append((1,293.66,currenttime - startrecord))
                            startrecord = currenttime
                    elif x<320:
                        playsound(volume,fs,1,329.63)
                        print("playsound E4")
                        if RECORD:
                            recordedpattern.append((1,329.63,currenttime - startrecord))
                            startrecord = currenttime

            ############################## MODE = 3 Recording External Sources ##############################################
            if MODE == 3:
                if y<90: # Record MIDI keyboard
                    if x < 120:
                        if not RECORD:
                            recordname = "test"+str(len(recordlist))+".wav"
                            recordlist.append(recordname)
                            os.system("arecord -D plughw:2,0 -d 3 "+recordname)
                            infodisplay = "Saved 3 seconds of voice to record" +str(len(recordlist) - 1)
                    elif x < 240:
                        if not RECORD:
                            recordname = "test"+str(len(recordlist))+".wav"
                            recordlist.append(recordname)
                            os.system("arecord -D plughw:2,0 -d 5 "+recordname)
                            infodisplay = "Saved 5 seconds of voice to record" +str(len(recordlist) - 1)
                    else:
                        if not RECORD:
                            recordname = "test"+str(len(recordlist))+".wav"
                            recordlist.append(recordname)
                            os.system("arecord -D plughw:2,0 -d 7 "+recordname)
                            infodisplay = "Saved 7 seconds of voice to record" +str(len(recordlist) - 1)
                    change = 3
                elif y>90: # Record Microphone
                    if x < 120:
                        if not RECORD:
                            recordname = "test"+str(len(recordlist))+".wav"
                            recordlist.append(recordname)
                            os.system("arecord -D plughw:2,0 -d 3 "+recordname)
                            infodisplay = "Saved 3 seconds of voice to record" +str(len(recordlist) - 1)
                    elif x < 240:
                        if not RECORD:
                            recordname = "test"+str(len(recordlist))+".wav"
                            recordlist.append(recordname)
                            os.system("arecord -D plughw:2,0 -d 5 "+recordname)
                            infodisplay = "Saved 5 seconds of voice to record" +str(len(recordlist) - 1)
                    else:
                        if not RECORD:
                            recordname = "test"+str(len(recordlist))+".wav"
                            recordlist.append(recordname)
                            os.system("arecord -D plughw:2,0 -d 7 "+recordname)
                            infodisplay = "Saved 7 seconds of voice to record" +str(len(recordlist) - 1)
                    change = 3

            ############################## MODE = 4 Editor ##############################################
            if MODE == 4:
                if y>200: ########## Wav Operations ##########
                    if x >250: ##### Stacking wav
                        if len(Concatenate) != 0 and not RECORD and WAVE:
                            combined = AudioSegment.from_wav("test"+str(Concatenate[0])+".wav")
                            for i in range(len(Concatenate)-1):
                                combined = combined.overlay(AudioSegment.from_wav("test"+str(Concatenate[i+1])+".wav"))
                            combined.export("test"+str(len(recordlist))+".wav", format="wav")
                            recordlist.append("test"+str(len(recordlist))+".wav")
                            infodisplay = "stack on top of each other to record " + str(len(recordlist)-1)
                        change = 4
                        Concatenate = []
                    elif x>190: ##### Concatenate wav
                        if len(Concatenate) != 0 and not RECORD and WAVE:
                            combined = AudioSegment.from_wav("test"+str(Concatenate[0])+".wav")
                            for i in range(len(Concatenate)-1):
                                combined = combined + AudioSegment.from_wav("test"+str(Concatenate[i+1])+".wav")
                            combined.export("test"+str(len(recordlist))+".wav", format="wav")
                            recordlist.append("test"+str(len(recordlist))+".wav")
                            infodisplay = "concatenated into one piece to record "+str(len(recordlist)-1)
                        change = 4
                        Concatenate = []
                    elif x<50: ##### Reverb
                        echo_time = 0.2
                        delay_amp = 0.5
                        fs, sound_int16 = scipy.io.wavfile.read("test"+str(currentrecord)+".wav")
                        sound_sig = sound_int16.astype(float)/2**15
                        # sound_sig = sound_int16/2**15
                        delay = round(echo_time * fs)
                        blank = np.zeros(delay)
                        # sound_sig = np.reshape(sound_sig, (-1,2))
                        print(sound_sig.shape)
                        # B = np.reshape(blank, (-1, 2))
                        B = blank
                        print(B.shape)
                        delayed_version = np.concatenate((B, sound_sig))
                        sound_new = np.concatenate((sound_sig, B))
                        sum_sounds = 150*(sound_new + delay_amp* delayed_version)
                        scipy.io.wavfile.write("test"+str(len(recordlist))+".wav", fs, sum_sounds.astype(np.float32))
                        recordlist.append("test"+str(len(recordlist))+".wav")
                        infodisplay = "reverbed the tone to record " +str(len(recordlist)-1)
                        change = 4
                    elif x < 100: ##### Echo
                        infodisplay = "echoed the tone"
                        echo_time = 0.2
                        delay_amp = 0.5
                        fs, sound_int16 = scipy.io.wavfile.read("test"+str(currentrecord)+".wav")
                        sound_sig = sound_int16.astype(float)/2**15
                        delay = round(echo_time * fs)
                        impulse_response = np.zeros(delay)
                        impulse_response[0] = 1
                        impulse_response[-1] = delay_amp
                        H = sound_sig.flatten()
                        output_sig = 150*np.convolve(H, impulse_response)
                        scipy.io.wavfile.write("test"+str(len(recordlist))+".wav",fs,output_sig.astype(np.float32))
                        recordlist.append("test"+str(len(recordlist))+".wav")
                        infodisplay = "echoed the tone to record " +str(len(recordlist)-1)
                        change = 4

                elif y>90: ########## Selecting Clips and change tone ##########
                    if x<50: ##### Falling tone
                        print("falling tone")
                        infodisplay = "falling tone by 1"
                        if not RECORD and not isinstance(recordlist[currentrecord], str):
                            newrecord = []
                            recordedpattern = recordlist[currentrecord]
                            for i in range(len(recordedpattern)):
                                if recordedpattern[i][0] != -1:
                                    newrecord.append( (recordedpattern[i][0], recordedpattern[i][1] - 32, recordedpattern[i][2]) )
                                else:
                                    newrecord.append( recordedpattern[i] )
                        elif isinstance(recordlist[currentrecord], str):
                            infodisplay = "Could not change voice for now"
                        change = 4
                    elif x<100: ##### Previous Track
                        infodisplay = "Previous Track"
                        currentrecord -= 1
                        if currentrecord < 0:
                            currentrecord = len(recordlist) - 1
                        change = 4
                    elif x<220: ##### Play Current Track
                        infodisplay = "Playing Track"
                        fillwhat(currentrecord,infodisplay)
                        if not RECORD and not isinstance(recordlist[currentrecord], str):
                            recordedpattern = recordlist[currentrecord]
                            time.sleep(0.5)
                            for i in range(len(recordedpattern)):
                                if recordedpattern[i][0] == -1:
                                    print("playing",recordedpattern[i][1])
                                    playdrum(recordedpattern[i][1])
                                else:
                                    playsound(volume,fs,recordedpattern[i][0],recordedpattern[i][1])
                                time.sleep(recordedpattern[i][2]/1.75)
                        elif isinstance(recordlist[currentrecord], str):
                            os.system("aplay "+recordlist[currentrecord])
                            infodisplay = "Played" + recordlist[currentrecord]
                        change = 4
                        time.sleep(0.5)
                    elif x<270: ##### Next Track
                        infodisplay = "Next Track"
                        currentrecord += 1
                        if currentrecord >= len(recordlist):
                            currentrecord = 0
                        change = 4
                    else: ##### Raising Tone
                        print("raising tone")
                        infodisplay = "raising tone by 1"
                        if not RECORD and not isinstance(recordlist[currentrecord], str):
                            newrecord = []
                            recordedpattern = recordlist[currentrecord]
                            for i in range(len(recordedpattern)):
                                if recordedpattern[i][0] != -1:
                                    newrecord.append( (recordedpattern[i][0], recordedpattern[i][1] + 32, recordedpattern[i][2]) )
                                else:
                                    newrecord.append( recordedpattern[i] )
                        elif isinstance(recordlist[currentrecord], str):
                            infodisplay = "Could not change voice for now"
                        change = 4

                elif y<90: ########## Digital Record Operations ##########
                    if x<64: ##### Converting list of arrays to wav format
                        infodisplay = "Finished converting to wav"
                        change = 4
                        if isinstance(recordlist[currentrecord], str) or RECORD:
                            infodisplay = "Already in wave format"
                        elif not isinstance(recordlist[currentrecord], str): ##### Concatenate Tone pieces into waveform
                            recordedpattern = recordlist[currentrecord]
                            combined = AudioSegment.from_wav("empty.wav")
                            for i in range(len(recordedpattern)):
                                if recordedpattern[i][0] == -1:
                                    combined += AudioSegment.from_wav("drumtone/"+recordedpattern[i][1])
                                else:
                                    # playsound(volume,fs,recordedpattern[i][0],recordedpattern[i][1])
                                    samples = (np.sin(2*np.pi*np.arange(fs*recordedpattern[i][0])*recordedpattern[i][1]/fs)).astype(np.float32)
                                    write("example.wav",fs,samples.astype(np.float32))
                                    combined += AudioSegment.from_wav("example.wav")
                                # time.sleep(recordedpattern[i][2]/1.75)
                                samples = (np.sin(2*np.pi*np.arange(fs*recordedpattern[i][2]/1.75)*1/fs)).astype(np.float32)
                                write("example.wav",fs,samples.astype(np.float32))
                                combined += AudioSegment.from_wav("example.wav")
                            combined.export("test"+str(currentrecord)+".wav", format="wav")
                            recordlist[currentrecord] = "test"+str(currentrecord)+".wav"
                    elif x<128: ##### Overwrite the original Clip with modified elements
                        infodisplay = "Saved to original Clip"
                        if len(Concatenate) != 0 and not RECORD and not WAVE: ## Concatenate into a large clip
                            conrecord = []
                            for i in range(len(Concatenate)):
                                conrecord += recordlist[Concatenate[i]]
                            recordlist[currentrecord] = conrecord
                        if len(Concatenate) != 0 and not RECORD and WAVE: ## Concatenate wave file
                            combined = AudioSegment.from_wav("test"+str(Concatenate[0])+".wav")
                            for i in range(len(Concatenate)-1):
                                combined += AudioSegment.from_wav("test"+str(Concatenate[i+1])+".wav")
                            combined.export("test"+str(currentrecord)+".wav", format="wav")
                            recordlist[currentrecord] = "test"+str(currentrecord)+".wav"
                        if len(newrecord) != 0 and not RECORD:
                            recordlist[currentrecord] = newrecord
                        if len(newrecord) == 0 and len(Concatenate) == 0:
                            infodisplay = "Cannot save empty record"
                        Concatenate = []
                        change = 4
                    elif x<192: ##### Save the modified content to a new clip
                        infodisplay = "Saved to New Clip"
                        if len(Concatenate) != 0 and not RECORD and not WAVE: ## Concatenate into a large clip
                            conrecord = []
                            for i in range(len(Concatenate)):
                                conrecord += recordlist[Concatenate[i]]
                            recordlist.append(conrecord)
                        if len(Concatenate) != 0 and not RECORD and WAVE: ## Concatenate wave file
                            combined = AudioSegment.from_wav("test"+str(Concatenate[0])+".wav")
                            for i in range(len(Concatenate)-1):
                                combined += AudioSegment.from_wav("test"+str(Concatenate[i+1])+".wav")
                            combined.export("test"+str(len(recordlist))+".wav", format="wav")
                            recordlist.append("test"+str(len(recordlist))+".wav")
                        if len(newrecord) != 0 and not RECORD:
                            recordlist.append(newrecord)
                        if len(newrecord) == 0 and len(Concatenate) == 0:
                            infodisplay = "Cannot save empty record"
                        Concatenate = []
                        change = 4
                    elif x<256: ##### Select clips for concatenation
                        if not RECORD and not isinstance(recordlist[currentrecord], str):
                            Concatenate.append(currentrecord)
                            infodisplay = "Concatenate digital" + str(Concatenate)
                            WAVE = False
                        elif isinstance(recordlist[currentrecord], str):
                            Concatenate.append(currentrecord)
                            infodisplay = "Concatenate wav file" + str(Concatenate)
                            WAVE = True
                        change = 4
                    else: ##### Speedup Clips by shrinking the gap
                        infodisplay = "Speedup"
                        if not RECORD and not isinstance(recordlist[currentrecord], str):
                            newrecord = []
                            recordedpattern = recordlist[currentrecord]
                            for i in range(len(recordedpattern)):
                                newrecord.append( (recordedpattern[i][0], recordedpattern[i][1], recordedpattern[i][2]/2) )
                        elif isinstance(recordlist[currentrecord], str):
                            infodisplay = "Could not change voice for now"
                        change = 4

    ############################## GPIO pins callback ##############################################
    if ( not GPIO.input(17) ): ##### Hard Quit button
        print (" ")
        print ("Button 17 pressed....")
        break
    if ( not GPIO.input(22) ): ##### Start/Stop Record
        print (" ")
        print ("Button 22 pressed....")
        if RECORD:
            recordlist.append(recordedpattern)
            recordedpattern = []
        RECORD = not RECORD
        recordedpattern = []
        time.sleep(0.5)
        startrecord = time.time()
    if ( not GPIO.input(23) ): ##### Play back the most recent record
        print (" ")
        print ("Button 23 pressed....")
        if not RECORD and recordlist: # nothing to play before the first record is saved
            recordedpattern = recordlist[-1] # most recent record, as described in the user manual
            time.sleep(0.5)
            if isinstance(recordedpattern, str): # wav clips are stored as file names
                os.system("aplay "+recordedpattern)
            else:
                for i in range(len(recordedpattern)):
                    if recordedpattern[i][0] == -1:
                        playdrum(recordedpattern[i][1])
                    else:
                        playsound(volume,fs,recordedpattern[i][0],recordedpattern[i][1])
                    time.sleep(recordedpattern[i][2]/1.75)
            time.sleep(0.5)
    if ( not GPIO.input(27) ): ##### Switch to the next menu
        MODE += 1
        if MODE > 4:
            MODE = 0
        change = MODE
        print (" ")
        print ("Button 27 pressed....")
        time.sleep(0.5)

end = time.time()
p.terminate()
print("time is", end - start)